7 Ethical Challenges Law Firms Must Solve Before Scaling AI Adoption

Suddenly:

- Drafts appear faster than you expected

- Emails sort themselves

- Research summaries feel almost instant

- Dashboards highlight what needs attention

It feels efficient. Helpful. Even exciting.

Then a few small things give you pause:

- A summary cites a case that doesn’t exist.

- A confidential email gets mislabeled.

- A billing tool flags a perfectly valid entry.

- A client casually asks, “Is AI touching our data?”

None of these moments are catastrophic, but together they reveal a larger truth:

AI isn’t the risk. Moving fast without thinking through the ethics is.

Before a law firm scales AI across teams and workflows, it must answer seven ethical questions. The firms that do this well move faster and more safely. The ones that don’t often learn the hard way.

Let’s break down what needs to be solved, in clear, practical terms and without technical jargon.

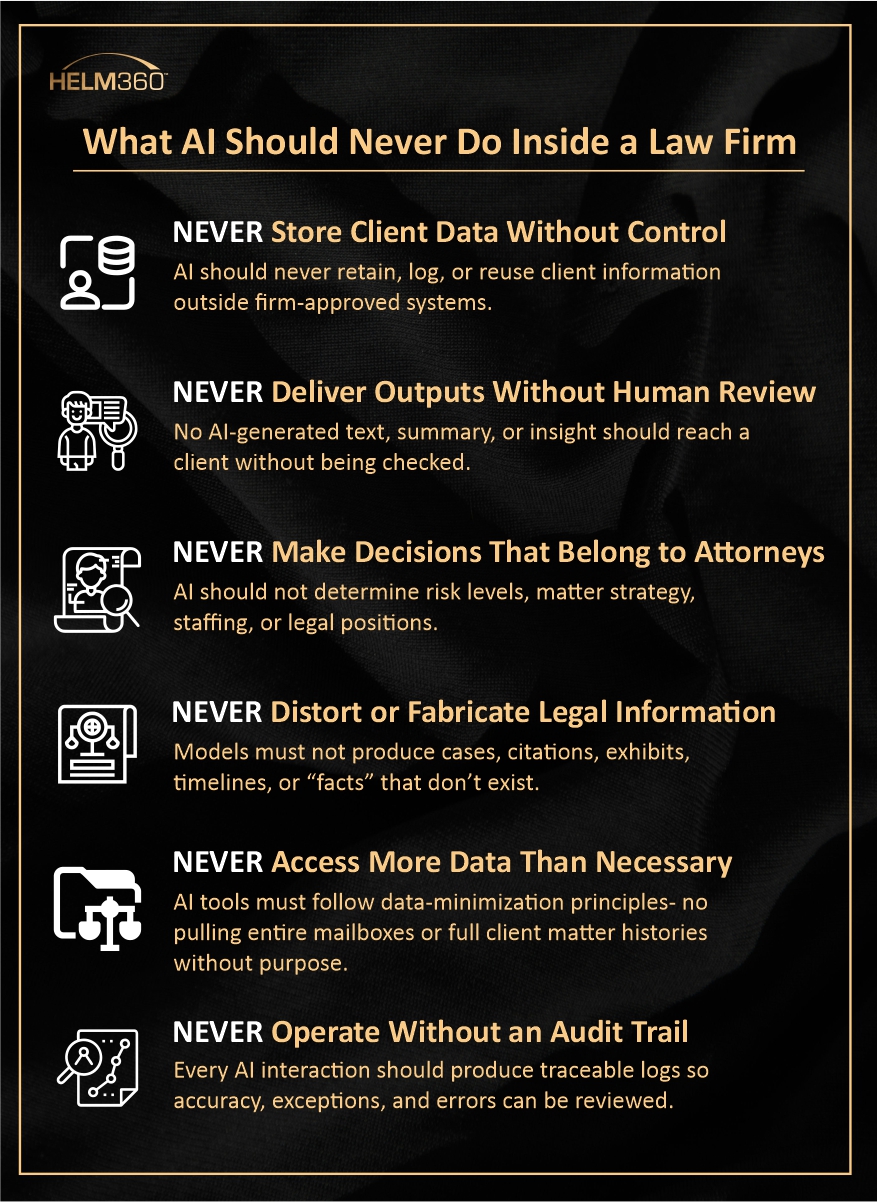

1. Confidentiality: The Hidden Risk Behind Copy-Paste

How do lawyers use AI today?

- A clause gets pasted into a tool to “summarize.”

- A deposition is dropped in to “pull key points.”

- An email thread is fed into a bot to “organize priorities.”

Fast? Yes.

Safe? Not always.

Lawyers often assume AI works like their DMS or Outlook. It doesn’t.

Your text might be:

- Temporarily stored

- Logged for “quality review”

- Processed on third-party servers

- Shared with internal model components you don’t control

Confidentiality used to be simple: keep client data inside the firm.

But with AI, data can move in ways that are not obvious.

Firms need to know:

- Where client data goes

- How long it stays there

- Whether it’s used to train anything

- Who has access to it

If you can’t map everywhere AI touches client information, you can’t guarantee confidentiality.
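For firms whose IT or legal ops team wants to make that map concrete, here is a minimal sketch of an inventory built around the four questions above. It is only an illustration: the `AIDataFlow` record, its field names, and the two sample tools are hypothetical and do not describe any real product or vendor.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class AIDataFlow:
    """One AI tool that touches client data. All field names are hypothetical."""
    tool: str
    data_location: Optional[str] = None       # where client data goes
    retention_days: Optional[int] = None      # how long it stays there (0 = zero retention)
    used_for_training: Optional[bool] = None  # whether it is used to train anything
    access: Optional[str] = None              # who has access to it


def unanswered_questions(flow: AIDataFlow) -> list[str]:
    """Return the names of the fields that are still unknown (None) for this tool."""
    return [f.name for f in fields(flow) if getattr(flow, f.name) is None]


# Illustrative entries only; not real vendors or settings.
inventory = [
    AIDataFlow("summarizer", "firm-controlled tenant", 0, False, "litigation team"),
    AIDataFlow("email-triage-bot", data_location="third-party servers"),
]

for flow in inventory:
    gaps = unanswered_questions(flow)
    status = "mapped" if not gaps else "unknown: " + ", ".join(gaps)
    print(f"{flow.tool}: {status}")
```

Any tool that still shows an "unknown" is one the firm cannot yet vouch for.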

What good controls look like

- Private, firm-controlled AI models

- Zero-retention settings

- Clear training on what is safe to paste

- Vendor transparency around storage/logging

Good AI saves time.

Responsible AI protects client trust.

2. Bias: When AI Accidentally Replays Your Firm’s History

AI learns from data.

Firm data reflects decades of decisions: good, bad, and inconsistent.

Examples:

- A risk model marks certain industries as “high risk” because older matters were complex.

- A pricing tool undervalues small matters because historically they weren’t prioritized.

AI doesn’t know whether the past was fair or biased.

It simply repeats patterns.

This can quietly affect:

- staffing

- pricing

- client prioritization

- workflow speed

Nothing looks wrong at first.

But slowly, the model pulls your firm back into old habits.

Ethical scaling requires

- audits of model outputs

- diverse human review of “risk” or “priority” scores

- attorneys making final decisions

AI should support judgment, not replace it.

3. Accountability: When “The System” Becomes the Scapegoat

When an AI-driven tool mislabels data or overlooks a conflict, the easiest response becomes:

“The system must have messed up.”

But:

- the system doesn’t attend risk meetings

- the system doesn’t call clients

- the system can’t fix itself

Ethically, firms must answer a simple question:

Who owns each AI tool?

Ownership should be crystal clear:

- a business owner (partner or department head)

- a technical owner (IT/legal ops)

- an escalation path

- authority to pause or override

If AI influences client work, a human must be accountable.
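One way to keep ownership from drifting is a simple register that flags any tool with a gap. The sketch below assumes a hypothetical `ToolOwnership` record with one field per item in the list above; the tool names and roles are placeholders, not a prescribed structure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolOwnership:
    """Ownership record for one AI tool. All field names are hypothetical."""
    tool: str
    business_owner: Optional[str] = None    # partner or department head
    technical_owner: Optional[str] = None   # IT / legal ops
    escalation_path: Optional[str] = None   # who gets called when something goes wrong
    pause_authority: Optional[str] = None   # who may pause or override the tool


REQUIRED = ["business_owner", "technical_owner", "escalation_path", "pause_authority"]


def missing_ownership(record: ToolOwnership) -> list[str]:
    """Return the ownership fields that are empty or unset for this tool."""
    return [name for name in REQUIRED if not getattr(record, name)]


# Illustrative entries only.
register = [
    ToolOwnership("conflict-checker", "Risk partner", "Legal ops lead",
                  "Risk committee", "Risk partner"),
    ToolOwnership("billing-flagging", business_owner="Finance director"),
]

for record in register:
    gaps = missing_ownership(record)
    if gaps:
        print(f"{record.tool}: no one assigned for {', '.join(gaps)}")
```

A tool that appears in this output has no accountable human for at least one of those roles.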

4. Transparency: Clients Don’t Fear AI; They Fear Not Knowing

Clients today are more AI-aware than many firms expect. Most already use AI internally.

They are not afraid of AI.

They are afraid of uncertainty.

They want clarity on:

- how their data is used

- whether humans review outputs

- which workflow steps involve AI

- how mistakes are prevented

- whether AI affects billing

Practical transparency could include:

- a one-page “How We Use AI” overview

- engagement letter language

- talking points for partners and relationship managers

Transparency builds confidence.

Silence creates concern.

5. UPL Boundaries: When Automation Sounds Too Much Like Advice

AI can:

- highlight clauses

- summarize documents

- generate suggested language

Helpful? Yes.

Legal advice? No.

Risk arises when:

- AI-generated summaries are sent directly to clients

- contract recommendations sound like strategic guidance

- non-lawyers rely on AI to interpret legal meaning

This is where unauthorized practice of law (UPL) concerns surface.

The fix is simple:

- AI can assist

- Lawyers must advise

- Humans must review before anything leaves the firm

- Clear disclaimers protect boundaries

AI is a brilliant assistant, but it cannot become a silent advisor.

6. Hallucinations: When “Looks Right” Isn’t Right

One of AI’s biggest risks in law is its confidence.

A hallucinated case.

A misquoted statute.

A perfect-looking but wrong timeline.

Everything appears polished.

Everything is incorrect.

The rule of professional responsibility remains unchanged:

If your name is on it, you check it.

To scale AI safely:

- treat AI output like work from a junior associate

- verify everything

- prefer tools that cite sources

- track hallucination patterns

AI can enhance accuracy, but only with human review.
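Tracking hallucination patterns does not need special software. As a rough sketch, reviewers could log each error they catch and count the entries by tool and error type. The `ReviewFinding` record, the error labels, and the sample data below are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewFinding:
    """One error a human reviewer caught in AI output. Labels are hypothetical."""
    tool: str
    error_type: str  # e.g. "nonexistent_case", "misquoted_statute", "wrong_timeline"


def error_patterns(findings: list[ReviewFinding]) -> Counter:
    """Count how often each (tool, error type) pair shows up in the review log."""
    return Counter((f.tool, f.error_type) for f in findings)


# Illustrative log entries only.
log = [
    ReviewFinding("research-assistant", "nonexistent_case"),
    ReviewFinding("research-assistant", "nonexistent_case"),
    ReviewFinding("research-assistant", "misquoted_statute"),
    ReviewFinding("timeline-builder", "wrong_timeline"),
]

patterns = error_patterns(log)
for (tool, error_type), count in patterns.most_common():
    print(f"{tool}: {error_type} x{count}")
```

A pair that keeps climbing tells the firm where review needs to be tighter, or which tool needs a second look.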

7. People & Culture: The Real Ethics of “Efficiency”

Behind every AI conversation is a quiet human question:

“Will this replace me?”

Support staff worry about automation.

Associates worry about missing early-career learning.

Departments worry about shrinking teams.

Ethical AI adoption requires honesty:

- AI changes roles

- People need support adapting

- Training is essential

- Transparency matters

- Efficiency shouldn’t come at the cost of trust

The firms that succeed with AI are the ones that say:

“We aren’t replacing people. We’re upgrading how they work, and we’ll teach them how.”

That single message transforms adoption.

Where Helm360 Supports Ethical, Scalable AI

Scaling AI inside a law firm isn’t just a tech decision. It’s an operational and ethical one.

Here’s how Helm360 supports responsible adoption:

- Keeping human oversight central

We help firms design workflows where attorneys and finance leaders stay in control: validating AI-assisted outputs, reviewing exceptions, and making final decisions.

- Protecting confidentiality with secure, firm-controlled environments

Our team helps strengthen governance around data flowing through platforms like Elite 3E and ProLaw so that sensitive information stays protected as AI becomes part of everyday work.

- Helping firms explain AI clearly to clients and leadership

We assist firms in building simple, transparent explanations of how AI supports internal work without compromising legal judgment or ethical standards.

- Giving attorneys quick access to firm information in the tools they already use

With our digital assistant Termi, attorneys can quickly access billing data, financial insights, documents, and firm knowledge across tools like Outlook, Teams, SharePoint, and more, without learning new systems.

- Modernizing without disrupting daily operations

Through services such as ProLaw Cloud Hosting and Application Managed Services, we help firms upgrade their environment, stabilize legacy systems, and strengthen day-to-day reliability without interrupting attorney or finance workflows.

AI becomes meaningful only when it’s structured, transparent, and aligned with the way legal teams work.

That’s the kind of foundation Helm360 helps firms build every day.

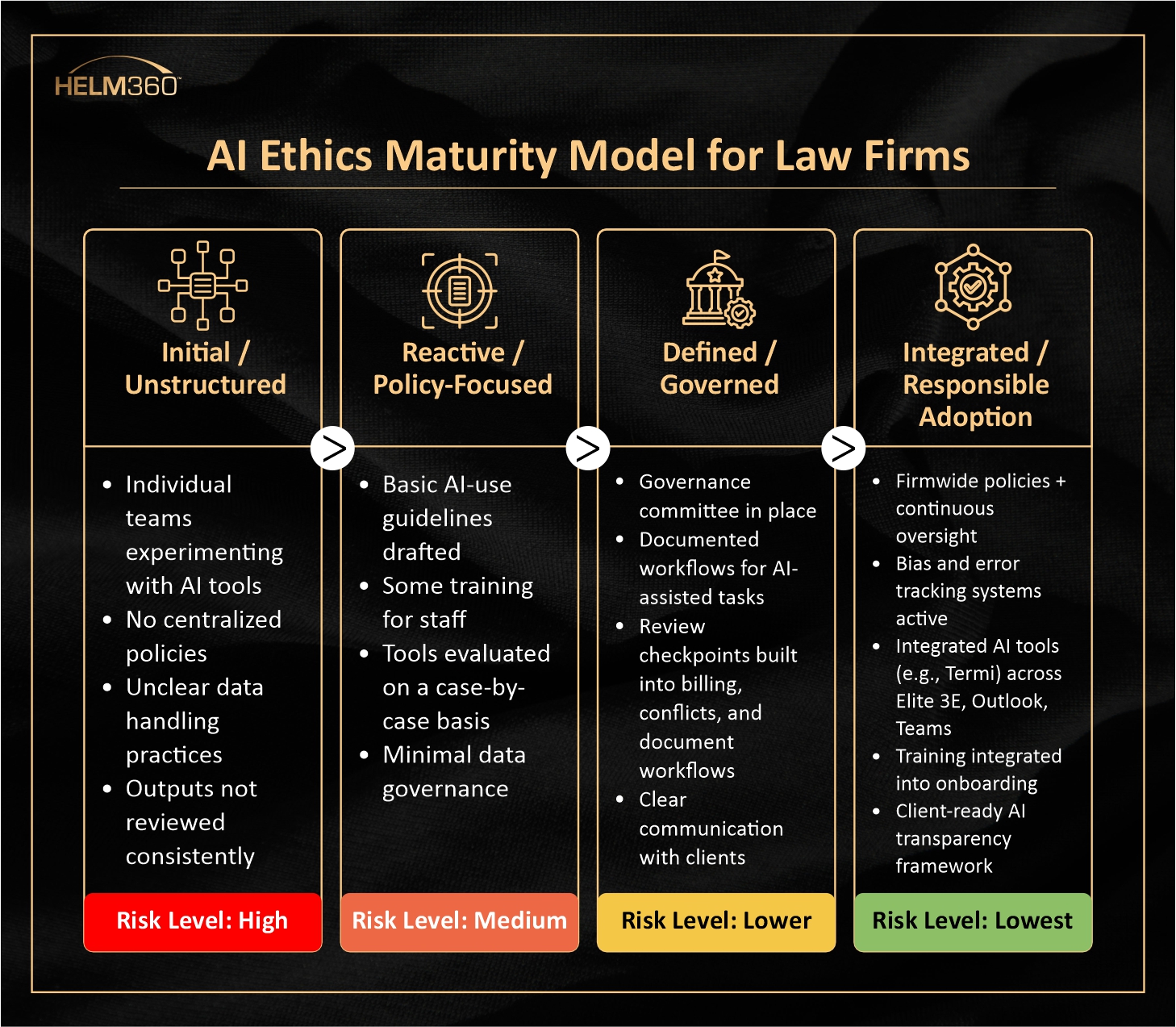

Quick AI Ethics Readiness Checklist

Before expanding AI across the firm, ask:

- Do we know where AI interacts with client or financial data?

- Are owners assigned for every AI-assisted workflow?

- Can we explain our use of AI clearly to clients?

- Do attorneys/staff understand what AI can and cannot do?

- Are we tracking errors, exceptions, and potential bias?

- Do our policies protect confidentiality and our people?

Most firms are still figuring this out.

You’re not alone.

If your firm wants a clearer, safer path to AI adoption, without compromising client trust or internal confidence, our team can help.