Is Generative AI Safe for Law Firms? Key Risks and Controls Explained

Recently, a federal judge in Mississippi made headlines by sanctioning both a lawyer and his client for AI hallucinations in a disability case, holding them jointly liable for opposing counsel’s fees.

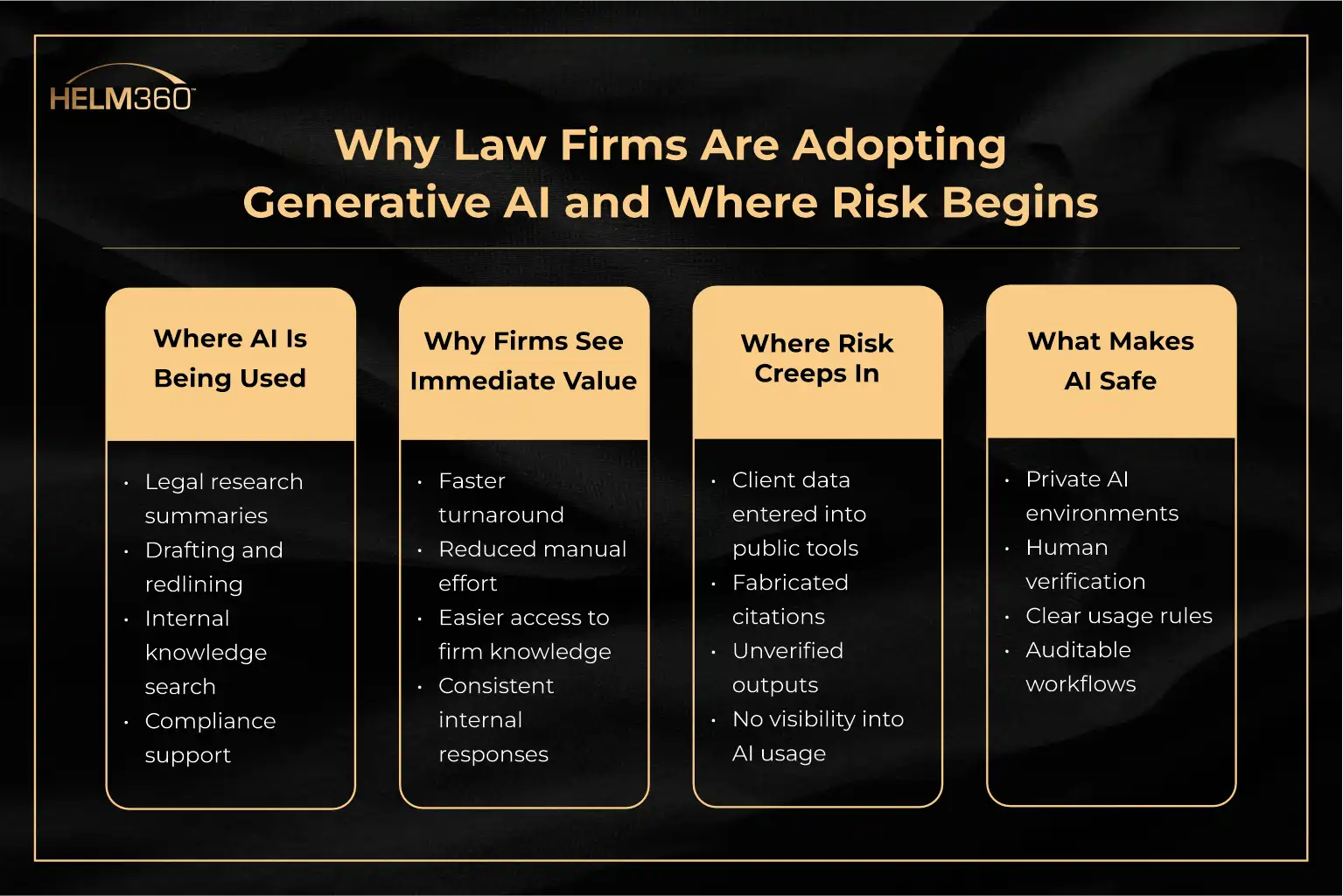

Mistakes like this are not limited to rookies at fly-by-night shops. According to recent ABA legal technology surveys, nearly half of larger law firms report experimenting with or adopting generative AI tools. Partners save hours on drafting, and associates speed through research. But when AI fabricates case law or leaks client data, your reputation and your wallet take the hit.

So, is generative AI safe for your firm? Yes, with the right guardrails.

This guide walks you through real courtroom cases and proven safeguards, showing how law firms can adopt generative AI without compromising accuracy, confidentiality, or professional responsibility.

Risk #1: Data Leakage – When Client Secrets Escape

Imagine an associate, in a rush, pasting a confidential term sheet into the public version of ChatGPT for a quick analysis. Weeks later, opposing counsel somehow references details from your deal during oral argument.

The numbers are alarming. One 2025 legal industry report found that 31% of legal professionals use generative AI personally, but only 21% of firms have formal AI policies. The result is significant shadow AI risk: people using unapproved tools on firm work with no oversight. This gap between individual use and institutional governance is now one of the fastest-growing risk categories for law firms.

According to IBM’s Cost of a Data Breach Report, the average breach in the professional services sector, which includes law firms, costs $5.08 million. Ungoverned AI use adds one more route to that kind of loss, which makes proper AI governance essential.

Practical Solutions:

- Deploy private AI instances, such as Azure OpenAI Service or Amazon Bedrock, instead of consumer chatbots

- Sanitize every prompt by stripping personally identifiable information (PII) and client identifiers before it reaches a model

- Use Helm360’s Termi, which connects securely to your systems without exposing data externally
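To make the sanitization step concrete, here is a minimal Python sketch of prompt redaction. It assumes the firm can supply a list of client names (for example, from its conflicts database) and uses a few simple regular expressions for email addresses, U.S. Social Security numbers, and phone numbers. The function name `redact` and the patterns are illustrative assumptions, not a complete PII detector.

```python
import re

# Illustrative patterns only (assumption): real PII detection needs much broader
# coverage (names, addresses, matter numbers) and usually a dedicated tool.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text: str, client_names: list[str]) -> str:
    """Replace known client names and pattern-matched PII with placeholders."""
    # Client names come from the firm (hypothetically, its conflicts database).
    for name in client_names:
        text = re.sub(re.escape(name), "[CLIENT]", text, flags=re.IGNORECASE)
    # Then mask anything matching the generic PII patterns.
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Acme Corp's CFO (jane.doe@acme.com, 555-867-5309) asked about SSN 123-45-6789."
print(redact(prompt, ["Acme Corp"]))
```

Pattern matching alone will miss identifiers it was never told about, so treat a filter like this as one layer alongside policy and private deployments, not a substitute for them.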

Risk #2: Hallucinations – AI Fabricates Case Law

The infamous Mata v. Avianca case should serve as a warning to every law firm. Lawyers submitted a brief citing six cases – all invented by ChatGPT. The judge didn’t just strike the filing; he sanctioned counsel and issued a stark warning to the profession.

A Stanford HAI study found that leading legal AI tools hallucinated on 17% to 33% of legal research queries. Courts across multiple U.S. jurisdictions have now documented a growing number of cases involving AI-generated legal errors, prompting formal warnings and sanctions.

Proven Defenses:

- Implement Retrieval-Augmented Generation (RAG) to ground AI responses in your firm’s verified documents, keeping in mind that RAG reduces hallucinations but does not eliminate them

- Require human verification for all client-facing deliverables

- Build verification checks into the workflow so every cited authority is confirmed against a primary source before client delivery
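Part of that verification gate can be automated. The Python sketch below extracts simple volume-reporter-page citations from an AI draft and flags any that are not in a set of already-verified authorities. The regex covers only a few federal reporters, and the `verified` set stands in for whatever citator or research-platform check your firm actually runs; both are assumptions, and the citations in the example are placeholders.

```python
import re

# Simplified volume-reporter-page pattern (assumption): only U.S., F.2d, F.3d,
# and F.4th. Real citation formats are far more varied.
CITATION_RE = re.compile(r"\b(\d{1,4}) (U\.S\.|F\.2d|F\.3d|F\.4th) (\d{1,5})\b")


def find_unverified(draft: str, verified: set[str]) -> list[str]:
    """Return each distinct citation in the draft that is not in the verified set."""
    found = [" ".join(m.groups()) for m in CITATION_RE.finditer(draft)]
    unique = list(dict.fromkeys(found))  # de-duplicate, keep first-seen order
    return [c for c in unique if c not in verified]


# Placeholder citations; `verified` would come from a citator lookup.
draft = "See 123 F.3d 456 and 789 F.4th 1011 (placeholder citations)."
unverified = find_unverified(draft, verified={"123 F.3d 456"})
ready_for_client = not unverified  # block delivery while anything is unverified
print(unverified, ready_for_client)
```

A flagged citation means a lawyer must pull and read the case. An empty result does not mean the draft is correct; it only means every citation matched one someone already checked, so human review still applies.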

For deeper insight, TLH Episode 40 discusses practical AI ethics boundaries.

Risk #3: Bias – AI’s Hidden Ethical Flaws

Generative AI does not merely inherit bias from its training data; it can amplify that bias inside legal workflows without anyone noticing. Intake systems can favor certain client profiles. Drafting tools can frame legal risk differently depending on who the client is. Hiring and staffing recommendations can quietly exclude qualified candidates. These risks create exposure across employment law, client screening, and professional responsibility.

Stanford Law research found that large language models gave different legal advice when only the name in a prompt changed, with worse outcomes for names associated with particular races and genders. That makes bias a direct operational and compliance risk for law firms using AI in people-facing decisions.

Practical Solutions:

- Test AI outputs across names, roles, and representative client scenarios before deployment

- Require human review for any AI output used in hiring, staffing, intake, or client risk scoring

- Standardize prompts and restrict free-form AI use in sensitive workflows

- Maintain audit logs of prompts, sources, and outputs to detect and correct bias patterns early
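Name-swap testing and audit logging fit together naturally. The Python sketch below runs one prompt template with different names, appends each prompt and output to a JSON Lines audit log, and flags the template for human review if the answer changes when only the name does. It assumes the prompt asks for a constrained answer (a single label), since free-text answers would need a scoring step before comparison. `stub_model` is a deliberately biased stand-in for your firm’s approved model endpoint, and all names and labels are hypothetical.

```python
import json
import os
import tempfile
import time
from typing import Callable

# Hypothetical stand-in type for a call to the firm's approved model endpoint.
ModelFn = Callable[[str], str]


def name_swap_test(model: ModelFn, template: str, names: list[str],
                   log_path: str) -> dict[str, str]:
    """Run one prompt template once per name and audit-log every exchange."""
    outputs: dict[str, str] = {}
    with open(log_path, "a", encoding="utf-8") as log:
        for name in names:
            prompt = template.format(name=name)
            output = model(prompt)
            outputs[name] = output
            # Append-only JSON Lines record: when, what was asked, what came back.
            log.write(json.dumps({"ts": time.time(), "prompt": prompt,
                                  "output": output}) + "\n")
    return outputs


def needs_review(outputs: dict[str, str]) -> bool:
    """True if the constrained answer changed when only the name changed."""
    return len(set(outputs.values())) > 1


def stub_model(prompt: str) -> str:
    # Deliberately biased stub so the check has something to catch.
    return "ESCALATE" if "Jordan" in prompt else "ACCEPT"


template = ("Intake note: {name} reports a wrongful-termination claim. "
            "Answer with one word, ACCEPT or ESCALATE.")
log_file = os.path.join(tempfile.mkdtemp(), "ai_audit_log.jsonl")
results = name_swap_test(stub_model, template, ["Alex Smith", "Jordan Lee"], log_file)
print(results, needs_review(results))
```

Because real model outputs can vary between runs even for identical prompts, a practical test would repeat each name several times and compare the distribution of answers rather than a single response.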

Risk #4: Vendor Dependencies & Regulatory Complexity

Managing partners lose sleep over three harsh realities: sudden AI vendor price hikes, uncertainty about owning AI-generated work product, and looming disclosure requirements.

Many law firms report concerns about who owns AI outputs. Standard vendor contracts often fail to address AI-specific risks such as output ownership and liability for algorithmic errors, leaving firms exposed when those errors prove costly.

Strategic Solutions:

- Negotiate explicit IP ownership clauses covering all AI-generated deliverables

- Architect hybrid cloud and on-premises deployments to limit dependence on any single vendor

- Partner with Helm360 Application Managed Services for seamless compliance across Intapp, Elite 3E, and AI layers
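One way to reduce vendor lock-in is to put a thin, provider-neutral interface between your workflows and any specific AI vendor. In this Python sketch, `ModelProvider`, `StubProvider`, and `Router` are hypothetical names; real adapters would wrap each vendor’s SDK. The routing rule, which keeps confidential matters on firm-controlled infrastructure, is an example policy, not a recommendation for every firm.

```python
from dataclasses import dataclass
from typing import Protocol


class ModelProvider(Protocol):
    """Any backend the firm approves: a cloud service or an on-premises model."""
    name: str

    def complete(self, prompt: str) -> str: ...


@dataclass
class StubProvider:
    # Hypothetical placeholder; a real adapter would call a vendor SDK here.
    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] response"


@dataclass
class Router:
    cloud: ModelProvider
    on_prem: ModelProvider

    def complete(self, prompt: str, confidential: bool) -> str:
        # Example policy (assumption): confidential matters never leave firm infrastructure.
        provider = self.on_prem if confidential else self.cloud
        return provider.complete(prompt)


router = Router(cloud=StubProvider("cloud-vendor"), on_prem=StubProvider("on-prem"))
print(router.complete("Summarize the attached memo", confidential=True))
```

With this shape, switching vendors means writing one new adapter rather than rewriting every workflow that calls a model.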

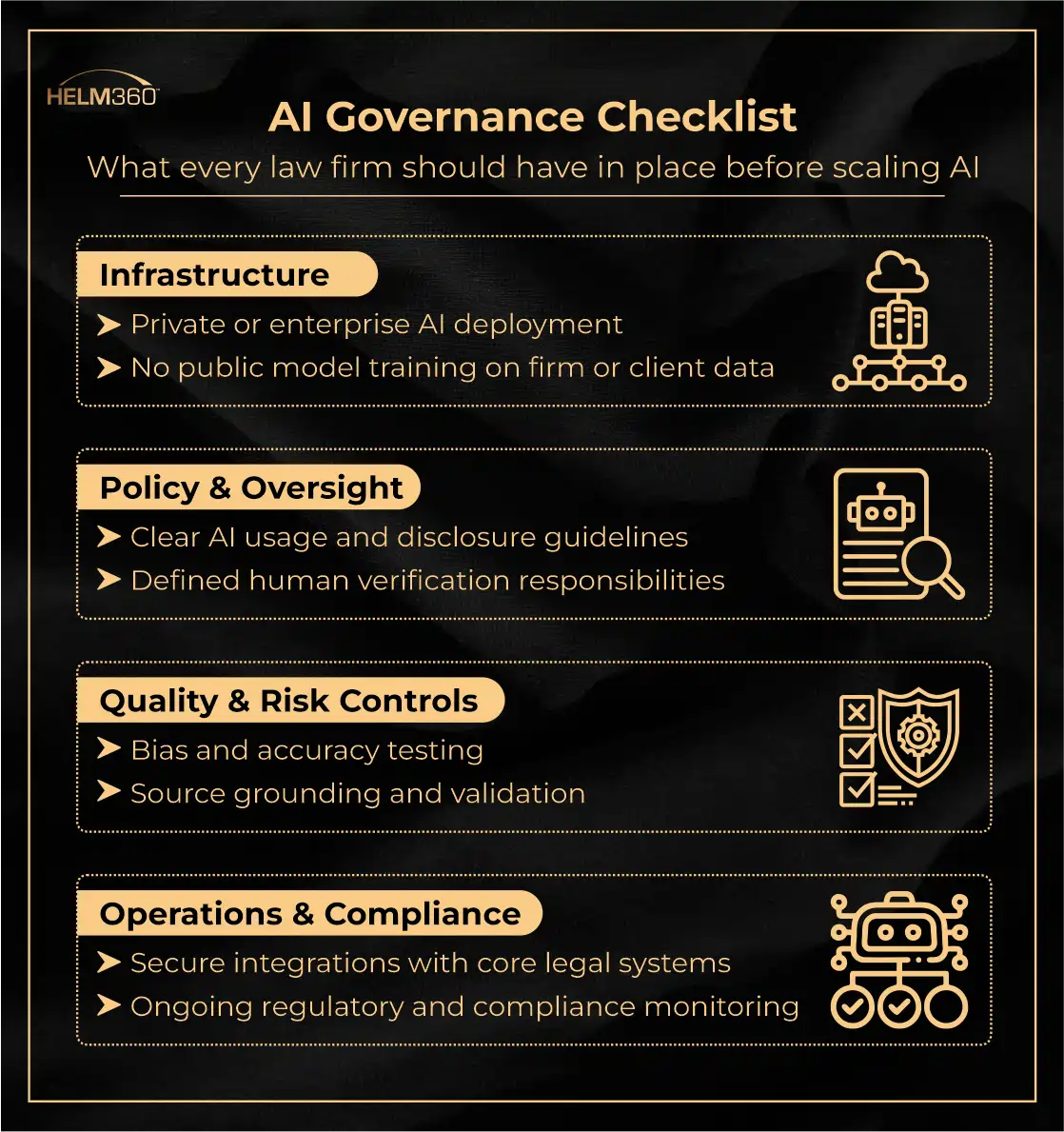

Building an AI-Safe Law Firm: A Practical Roadmap

Law firms that succeed with generative AI don’t treat it as an ad-hoc productivity tool.

They introduce AI in stages, balancing innovation with confidentiality, ethical responsibility, and regulatory expectations.

Below is a practical, phased roadmap many firms are following today.

Phase 1: Pilot Safely (0–3 Months)

Focus on controlled experimentation without client exposure.

- Limit AI use to non-client, low-risk tasks such as anonymized research and internal drafting

- Avoid public AI tools; use private or enterprise AI environments only

- Prohibit uploading client data, PII, or confidential documents

- Document basic AI usage guidelines covering confidentiality and verification

Phase 2: Policy, Training & Guardrails (3–6 Months)

Move from experimentation to governance.

- Roll out a formal firm-wide AI usage policy

- Train attorneys and staff on ethical boundaries, hallucination risk, and human verification

- Introduce role-based AI access with built-in safeguards

- Conduct regular accuracy and bias reviews aligned with frameworks such as the NIST AI Risk Management Framework (AI RMF)
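Role-based access can start as a simple policy table. The Python sketch below denies by default, grants each role a set of AI use cases, and marks which use cases require attorney sign-off before output is released. The roles, use-case names, and assignments are hypothetical placeholders for whatever your firm’s policy actually defines.

```python
from enum import Enum


class Role(Enum):
    PARTNER = "partner"
    ASSOCIATE = "associate"
    STAFF = "staff"


# Hypothetical policy table: which AI use cases each role may access.
POLICY: dict[Role, set[str]] = {
    Role.PARTNER: {"internal_drafting", "research", "client_drafting"},
    Role.ASSOCIATE: {"internal_drafting", "research", "client_drafting"},
    Role.STAFF: {"internal_drafting"},
}

# Use cases whose output needs attorney sign-off before it is released.
REQUIRES_REVIEW = {"research", "client_drafting"}


def authorize(role: Role, use_case: str) -> tuple[bool, bool]:
    """Return (allowed, human_review_required); unknown use cases are denied."""
    allowed = use_case in POLICY.get(role, set())
    return allowed, allowed and use_case in REQUIRES_REVIEW


print(authorize(Role.STAFF, "client_drafting"))
print(authorize(Role.ASSOCIATE, "client_drafting"))
```

Keeping the policy in one table makes it easy to review alongside the written AI usage policy and to log every authorization decision.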

Phase 3: Scale with Confidence (6–12 Months)

Expand AI only after controls are proven.

- Extend AI to client-facing research and drafting, with mandatory human review

- Integrate AI into practice management and financial systems (e.g., Intapp, Elite 3E)

- Maintain audit trails, access controls, and usage logs

- Monitor evolving regulatory requirements and client expectations

The Bottom Line: AI Is Safe When Law Firms Stay in Control

Generative AI is already embedded in legal work. Research moves faster. Drafting feels lighter. Teams expect efficiency.

What firms are learning the hard way is this:

- AI doesn’t reduce responsibility. It concentrates it.

- Courts, regulators, and clients don’t evaluate how advanced your tools are. They evaluate outcomes. Accuracy. Confidentiality. Professional judgment.

- Firms that govern AI deliberately protect trust and flexibility.

- Firms that don’t are left explaining mistakes after the fact.

The difference isn’t adoption speed. It’s control.

What Smart Law Firms Do Next

Leading firms don’t panic, and they don’t pause innovation. They put structure around it.

- They replace public AI tools with secure, private environments

- They require human verification before anything reaches a client

- They align AI workflows with existing legal and financial systems

- They treat AI governance as ongoing, not a one-time setup

This approach allows firms to benefit from AI without creating silent risk.

Final Thought: Bring AI Under Control

Generative AI is not the risk.

Uncontrolled generative AI is.

Law firms that move early stay in control of how AI supports their work, protects client data, and fits within professional responsibility. Firms that delay often end up responding after issues surface.

The difference is governance.

Clear usage rules. Human verification. Secure access to firm knowledge. Systems that remain auditable and accountable.

Firms that govern AI deliberately reduce exposure while achieving meaningful productivity gains without compromising trust or standards.

Bring AI under control at your firm.

Talk to Helm360 about enabling governed, law-firm-ready AI through secure internal knowledge access and managed legal technology platforms.