Termi powered by Digital Eye: Helping law firms harness GPT accurately and reliably

Generative AI is quickly becoming a go-to business tool. Who doesn’t love the way ChatGPT returns fully fleshed-out ideas seconds after you feed it a simple prompt? It’s so magical! However, the primary sticking point with the GPT model is relevance and validation: Are ChatGPT’s results accurate, true, and relevant?

We’ve all heard tales of ChatGPT making things up. In the world of large language models (LLMs), this is called hallucination. It occurs because the model is built to produce plausible-sounding text from patterns in its training data, which includes accurate and inaccurate material alike, rather than to serve as a source of truth. This is a major problem for real-world deployments, where we expect accurate and reliable information.

Here at Helm 360, we’ve been putting a lot of thought into how we can help law firms leverage this incredible technology in a controllable way that alleviates the uncertainty and potential liabilities associated with the current framework.

We were pleased to discover that we have the building blocks for a viable solution on hand: our AI-enabled chatbot, Termi, and our automated data discovery tool, Digital Eye. Here’s how we’ve combined these two next-generation tools to create a unique solution to the GPT hallucination conundrum.

Step #1: Leverage firms’ unstructured data

As mentioned above, the primary challenge the GPT model presents for law firms is accuracy. Is the information it’s returning true? It sounds true. It looks true. But unless you take time to verify the results, you can’t accept them as true. That’s not helpful, especially in the legal sector.

However, the GPT model’s ability to scan large amounts of data and quickly return a result has the potential to be incredibly handy. That functionality definitely could benefit attorneys and boost their efficiency.

When we considered GPT’s accuracy problem in terms of tools we already have available, we quickly realized it wasn’t GPT that was the hurdle, but its LLM. Remember, OpenAI’s GPT model runs on an immense LLM. Think of it as a Great Lakes-sized data repository. There’s so much information you can’t see the other side. And the inputs are coming from everywhere: data streams, rivulets, and full-blown rivers. And just like the water going into the Great Lakes, the data isn’t always pure and consumable.

We needed an LLM with truthful, accurate data.

This is where your firm’s unstructured data comes in.

Every law firm has vast amounts of unstructured data. By unstructured, we mean data without a predefined structure, such as text documents, PDFs, emails, image files, etc. This is data containing knowledge about the firm, policy information, and other valuable information that reveals a lot about the firm’s workings. And it’s all accurate.

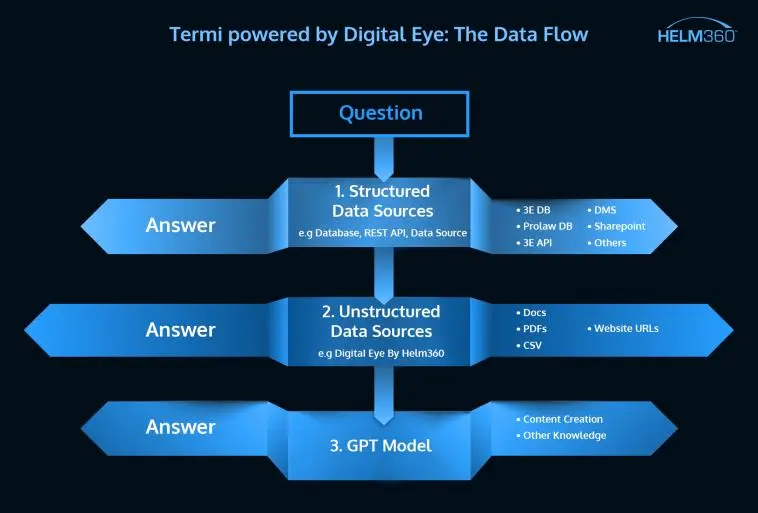

Creating this proprietary LLM changes your firm’s data landscape by giving you a new accessible layer. The result is a three-tiered data structure:

- Structured data, such as the 3E database, ProLaw database, DMS, etc.

- Proprietary LLM made up of your firm’s unstructured data, like emails, text documents, images, etc.

- OpenAI’s GPT model, which sources from the larger, unregulated LLM.

This structure does two things:

- It allows firms to get more mileage out of their unstructured data.

- It creates a reliable mini-GPT model with a source of truth.

Step #2: Expand Digital Eye’s functionality

We originally created Digital Eye to improve data quality and automate data discovery. However, its ability to scan large amounts of data quickly makes it a perfect fit for the next step in our generative AI solution.

We added new functionality to Digital Eye that combines the power of LLMs with the context of unstructured data. This allows Digital Eye to not only look for data anomalies as it was originally intended, but to also detect exact query matches within your firm’s LLM.

For example, suppose you’re a large firm with multiple locations, each with its own employee handbook. To answer a question like “What are my maternity leave options?”, users typically have to identify the right handbook and then scroll through it. The handbook (unstructured data) is in an electronic format, but it still takes time to find the needed information; the data isn’t exactly handy.

However, if your firm has this three-tiered data structure with a proprietary LLM, the data in the employee handbooks is accessible to Digital Eye. The tool scans the documents and returns an accurate, reliable result to the exact query in seconds.
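To make the handbook example concrete, here is a minimal sketch of the lookup idea. It is not Digital Eye’s actual implementation: the `HANDBOOKS` data, the `tokenize` and `find_passage` helpers, and the simple term-overlap scoring are all assumptions chosen for illustration, and the handbook text is placeholder content.

```python
import re

# Hypothetical handbook excerpts keyed by office (placeholder text only).
HANDBOOKS = {
    "London": [
        "Maternity leave options are described in section 4 of this handbook.",
        "Expense claims are described in section 7 of this handbook.",
    ],
    "New York": [
        "Parental leave options are described in section 5 of this handbook.",
        "Expense claims are described in section 8 of this handbook.",
    ],
}

# Common words that carry no meaning for matching purposes.
STOPWORDS = {"what", "are", "my", "the", "a", "of", "for", "is", "to", "in", "this"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword terms."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOPWORDS


def find_passage(question, office):
    """Return the passage in the office's handbook that shares the most
    terms with the question, or None if nothing overlaps."""
    terms = tokenize(question)
    best, best_score = None, 0
    for passage in HANDBOOKS.get(office, []):
        score = len(terms & tokenize(passage))
        if score > best_score:
            best, best_score = passage, score
    return best


print(find_passage("What are my maternity leave options?", "London"))
```

The point of the sketch is that the user never has to pick a handbook or scroll through it: the office selects the document, and the question selects the passage. A production system would likely use far more capable matching than term overlap.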

Digital Eye can also act as a gatekeeper between your firm’s known data landscape and OpenAI’s LLM. Digital Eye’s parameters can be set to alert users when their requested result can’t be found in the firm’s two sources of truth: the structured data and the proprietary LLM. The tool gives the user a big “hey! you’re entering the OpenAI unknown!” alert so the user knows they’re moving into the realm of questionable results.
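The gatekeeper behavior described above can be sketched as a tiered fallback. This is an illustration under stated assumptions, not the product’s real API: `Answer`, `answer_query`, and the three lookup callables are hypothetical names, and each tier is stubbed as a plain function.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Answer:
    text: str
    source: str     # which tier produced the answer
    verified: bool  # True only for the firm's own sources of truth


# A lookup returns an answer string, or None when the tier has no result.
Lookup = Callable[[str], Optional[str]]


def answer_query(
    query: str,
    structured: Lookup,
    proprietary: Lookup,
    external: Callable[[str], str],
) -> Answer:
    """Try the firm's two sources of truth in order; fall back to the
    external model only when both come up empty, and flag that result."""
    trusted_tiers = (("structured data", structured), ("proprietary LLM", proprietary))
    for name, lookup in trusted_tiers:
        result = lookup(query)
        if result is not None:
            return Answer(result, name, verified=True)
    return Answer(external(query), "external GPT", verified=False)


def render(answer: Answer) -> str:
    """Prefix unverified answers with a warning for the user."""
    if answer.verified:
        return answer.text
    return "Warning: this result comes from outside the firm's data. " + answer.text
```

A caller would pass in whatever functions query each tier. The `verified` flag is what lets the front end show the “you’re entering the OpenAI unknown” alert instead of presenting every answer with equal confidence.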

Step #3: Top it off with Termi

All of this back-end wizardry is useless if the front-end isn’t user-friendly, which brings us to Termi.

Termi is our AI-enabled chatbot designed specifically to be attorney-friendly. We created it to make accessing a firm’s structured data, like WIP, time entry, customer information, etc., quick and easy. Termi employs a plain English question and response format that makes using it intuitive and approachable.

In our current scenario, you use Termi to answer natural language questions, generate text, and create other types of content via the Digital Eye API. And, as with many AI-enabled tools, Termi “learns” common questions and language specific to your firm as its knowledge library grows.

Real world examples

Together, Termi and Digital Eye create a powerful generative AI-based tool with a foundational source of truth, laying the groundwork for a wide array of real-world applications.

Here are three specific use case examples:

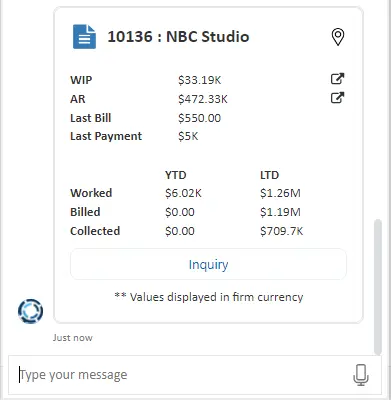

- Questions for your structured data repository

  - The attorney prompts Termi: Give me a client summary for NBC Studio.

  - Result: Termi sources the results from the firm’s PMS (known data) and returns them directly to the attorney via Termi’s chatbot interface.

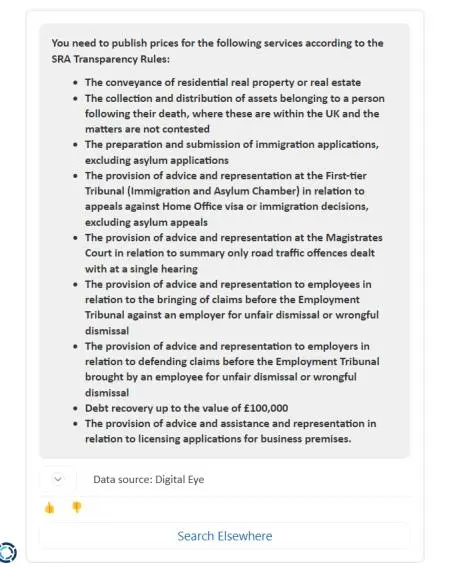

- Questions for your unstructured data (proprietary LLM)

  - The attorney asks Termi: What services do I need to publish prices for according to the SRA?

  - Result: Digital Eye scans the firm’s proprietary LLM for information specific to this topic, factual and contextual, and returns an accurate result that Termi delivers in its interface.

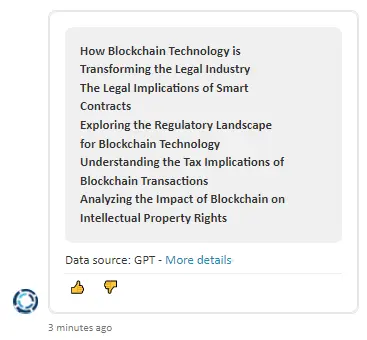

- Questions for GPT

  - The attorney prompts Termi: Termi, give me five blog topics based on the legal implications of blockchain technology.

  - Result: Termi scans your structured data, then moves the request to Digital Eye, which scans the firm’s LLM. Finally, the request is sent to GPT, which reaches into OpenAI’s LLM and returns the results via Termi’s interface.

This all happens whenever and wherever your attorneys need it; Termi is mobile and device friendly. So not only does this solution streamline accessing and using data, but it also aligns this usage process with your attorneys’ daily workflow, allowing them to stay on track and productive.

Parting thoughts

Bringing new technology into your firm’s landscape always comes with uncertainty: Will it work? Will it produce ROI? Will it be used? Will it integrate with the current landscape? The list goes on. We get it. But generative AI is seeping into our culture. Your attorneys are already using it. The question isn’t whether generative AI poses a risk to your firm, but how you develop the means and culture to mitigate its risk now while the technology is still in its infancy.

Termi powered by Digital Eye gives you a powerful solution that puts your firm ahead of generative AI’s hallucination problems while allowing your attorneys to tap into the GPT model’s benefits. By combining the power of LLMs with the context of unstructured data, this unique, next-generation solution supports data-driven decision-making, improves content creation, and leverages the GPT model safely.

Want to see Termi powered by Digital Eye in action? Contact us! We’re happy to give you a free demo.